Topics Discussed:

- Containers

- Kubernetes components

- Managed K8s

- EKS

- Upgrade EKS

- Security

- Cluster Autoscaler

Containers: Containers bundle your application’s code, libraries, configuration files, and everything it needs to run. This guarantees the same behavior across different environments (development, testing, production). No more “it works on my machine!” issues.

Why container orchestration:

- Automated Deployment of Containers: Streamlined deployment of containers, ensuring efficient and consistent application delivery.

- Redundancy and High Availability: Redundant infrastructure ensures uninterrupted service availability even in the event of failures, enhancing application resilience.

- Scalability Based on Demand: Dynamic scalability based on changing demands, allowing applications to handle fluctuating workloads effectively.

- Load Balancing: Optimal distribution of traffic across multiple containers, preventing overloading and maximizing resource utilization.

- Health Monitoring: Continuous health monitoring of containers, identifying and addressing issues promptly to maintain service uptime.

- Service Discovery: Facilitates the discovery and communication between containers, enabling efficient service interactions within the application.

- Enhanced Security: Robust security measures protect containerized applications from potential threats and vulnerabilities.

Kubernetes:

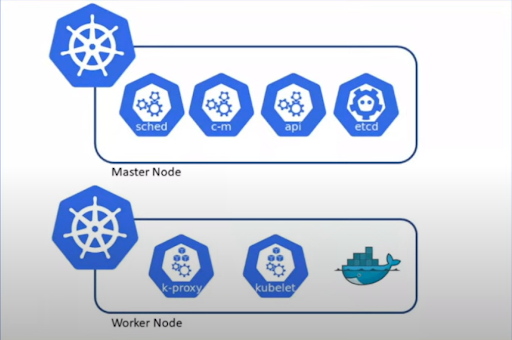

Master Nodes: The primary function of master nodes is to manage worker nodes. Master nodes run a set of Kubernetes processes that keep the cluster functioning smoothly. These processes are collectively called the control plane.

Components of Master Nodes:

Kubernetes API Server: The Kubernetes API Server serves as the entry point for the cluster. When users issue kubectl commands, they are directly communicating with the API Server. The API Server is responsible for authenticating users, validating requests, retrieving data from etcd, and interacting with the scheduler and kubelet.

etcd: etcd is a distributed, reliable key-value store that stores the state of Kubernetes objects. This includes information such as which pods are running or stopped, and which pods are scheduled on which nodes.

Kubernetes Scheduler: The Kubernetes Scheduler is responsible for scheduling pods on available nodes. It considers factors such as resource requirements, limits, taints and tolerations, and node selectors when making scheduling decisions.
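The scheduling decision described above can be sketched as a filter step followed by a scoring step. This is a simplified illustration, not the real scheduler: the `Node` and `Pod` classes, the field names, and the "most free CPU wins" scoring rule are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int                      # free CPU in millicores
    free_mem_mi: int                     # free memory in MiB
    labels: dict = field(default_factory=dict)
    taints: set = field(default_factory=set)


@dataclass
class Pod:
    name: str
    cpu_m: int                           # requested CPU in millicores
    mem_mi: int                          # requested memory in MiB
    node_selector: dict = field(default_factory=dict)
    tolerations: set = field(default_factory=set)


def feasible(pod: Pod, node: Node) -> bool:
    """Return True if the node passes every filter for this pod."""
    fits = pod.cpu_m <= node.free_cpu_m and pod.mem_mi <= node.free_mem_mi
    selector_ok = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    taints_ok = node.taints <= pod.tolerations   # every taint must be tolerated
    return fits and selector_ok and taints_ok


def pick_node(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Filter the nodes, then score them (assumed rule: most free CPU wins)."""
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None                      # the pod would stay Pending
    return max(candidates, key=lambda n: n.free_cpu_m).name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024),
    Node("node-b", free_cpu_m=2000, free_mem_mi=4096, labels={"disk": "ssd"}),
    Node("node-c", free_cpu_m=4000, free_mem_mi=8192, taints={"gpu-only"}),
]
web = Pod("web", cpu_m=1000, mem_mi=512, node_selector={"disk": "ssd"})
print(pick_node(web, nodes))
```

Here node-a is too small, node-c lacks the requested label and carries a taint the pod does not tolerate, so only node-b remains.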

Kubernetes Controller Manager: The Kubernetes Controller Manager is responsible for maintaining the desired state of the cluster. Various controllers (such as the ReplicaSet controller and the Deployment controller) continuously monitor the state of resources and make adjustments to ensure that they match the desired state.
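The "desired state versus observed state" idea can be illustrated with a tiny reconcile function. This is only a sketch of the pattern; the function name, the pod naming scheme, and the action tuples are hypothetical and do not reflect how the real controllers are implemented.

```python
from typing import List, Tuple


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[Tuple[str, str]]:
    """Return the actions needed to move the observed state to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones.
        return [("create", f"pod-{len(running_pods) + i}") for i in range(diff)]
    if diff < 0:
        # Too many pods: delete the surplus, taken from the end of the list.
        return [("delete", name) for name in running_pods[diff:]]
    return []                            # already in the desired state


print(reconcile(3, ["pod-0"]))
print(reconcile(1, ["pod-0", "pod-1", "pod-2"]))
```

A real controller runs this kind of comparison continuously, so a pod that crashes or is deleted by hand is replaced on the next pass.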

Components of Worker Nodes:

Kubelet: The kubelet is an agent that runs on each worker node. It communicates with the API Server and ensures that containers are running in a pod as specified in the pod manifest.

Kube Proxy: Kube Proxy maintains network rules on the worker nodes. It helps manage network communication to and from pods within the cluster.

Manifest Files: Manifest files are configuration files that describe the desired state of Kubernetes resources within a cluster. These resources can include pods, services, deployments, replica sets, config maps, and more. Manifest files are typically written in JSON or YAML and are used to define how Kubernetes should create, configure, and manage these resources.
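As a minimal sketch of what a manifest contains, the snippet below builds a Deployment as a Python dictionary and prints it as JSON. The name `web`, the label `app: web`, and the `nginx:1.25` image are placeholders chosen for illustration.

```python
import json

# Desired state: three replicas of one container, selected by the label app=web.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "nginx:1.25",
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

manifest_json = json.dumps(deployment, indent=2)
print(manifest_json)
```

Saved to a file, the output could be applied with kubectl apply -f. The selector labels must match the pod template labels, otherwise the API Server rejects the Deployment.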

Challenges of Self-Managed Kubernetes Control Plane:

- Ensure high availability with multiple master nodes.

- Scale the control plane as needed to handle increased load.

- Maintain continuous operation of etcd and regularly perform backups.

- Apply security patches to the control plane to keep it secure.

- Manage the master nodes and monitor their resource usage and health.

- Update the Kubernetes version carefully to ensure a smooth transition.

Managed K8s

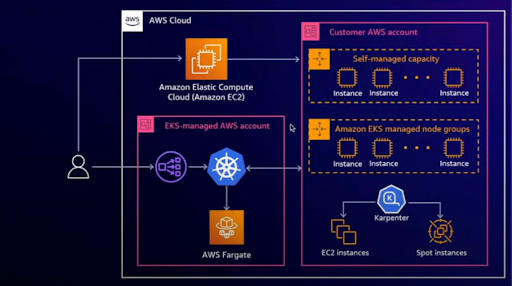

- Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service by Amazon Web Services (AWS) that simplifies deploying, operating, and scaling Kubernetes clusters.

- EKS is integrated with AWS services like Amazon Elastic Block Store (EBS), Amazon Virtual Private Cloud (VPC), and Amazon Elastic Load Balancing (ELB), enhancing functionality and security. It also seamlessly integrates with AWS CloudFormation, enabling infrastructure-as-code (IaC) practices.

- Advantages of using EKS include managed infrastructure, high availability, scalability, security, and cost efficiency.

Deploy EKS anywhere:

- AWS Cloud

- Customer-operated infrastructure

- AWS Outposts

- AWS Local Zones

- AWS Wavelength

EKS Control plane Architecture

- The control plane is deployed across three Availability Zones (AZs) to ensure high availability.

- A Network Load Balancer distributes traffic evenly across the control plane's API Server instances.

- The master nodes run on Amazon Elastic Compute Cloud (EC2) instances that AWS manages.

- etcd, a distributed key-value store, holds the cluster's state and configuration data. etcd instances are deployed in all three AZs to ensure data redundancy.

EKS Data Plane (Worker Nodes):

Self-Managed Worker Nodes:

- The worker nodes are managed by your own team.

- You are responsible for keeping node versions up to date.

- If you want a more customized setup, you can use your own custom AMIs.

AWS Managed Worker Nodes:

- AWS takes responsibility for provisioning and managing the worker nodes, so your team does not have to.

AWS Fargate:

- This serverless option runs pods without any worker nodes for you to provision or manage.

High Level Architecture

Cluster Creation Processes:

- AWS Management Console

- AWS Command Line Interface

- Infrastructure as Code tools

- eksctl command-line interface

Cluster Creation with eksctl

eksctl is a command-line tool that automates the creation and management of Amazon Elastic Kubernetes Service (Amazon EKS) clusters. It lets you quickly create an EKS cluster in a specified region with the desired configuration.

In this example, the command creates a new EKS cluster named “eks-test” in the “us-east-1” region, running Kubernetes version 1.27. The cluster also gets a managed node group named “managed-node” with two t2.micro nodes.

eksctl create cluster --name eks-test --region us-east-1 --version 1.27 --nodegroup-name managed-node --node-type t2.micro --nodes 2
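eksctl can also read cluster settings from a config file passed with -f instead of individual flags. The sketch below expresses the same settings as the command above in that structure and prints them as JSON. The field names follow the eksctl `v1alpha5` ClusterConfig schema as best recalled here and should be checked against the eksctl documentation. Config files are normally written in YAML, so treating this JSON output as a valid config file is also an assumption to verify.

```python
import json

# Same settings as the command-line example: cluster name, region,
# Kubernetes version, and one managed node group with two t2.micro nodes.
cluster_config = {
    "apiVersion": "eksctl.io/v1alpha5",
    "kind": "ClusterConfig",
    "metadata": {"name": "eks-test", "region": "us-east-1", "version": "1.27"},
    "managedNodeGroups": [
        {"name": "managed-node", "instanceType": "t2.micro", "desiredCapacity": 2}
    ],
}

config_json = json.dumps(cluster_config, indent=2)
print(config_json)
```

Keeping the settings in a file makes the cluster definition reviewable and repeatable, which fits the infrastructure-as-code approach mentioned earlier.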

EKS Addons

EKS Addons are software components that can be installed on an Amazon Elastic Kubernetes Service (EKS) cluster to extend its functionality. Some commonly used addons include:

- Kube-proxy: This addon maintains network rules on each worker node so that traffic addressed to a service reaches the right pods.

- CoreDNS: This addon provides DNS resolution for pods within the cluster.

- VPC CNI: This addon gives each pod an IP address from the Virtual Private Cloud (VPC), so pods can reach other resources in the VPC directly without an external gateway.

- EFS CSI Driver: This addon provides persistent storage using Amazon Elastic File System (EFS). EFS is a fully managed file system service that offers scalable, reliable, and secure storage for containerized applications.

- EBS CSI Driver: This addon provides persistent storage using Amazon Elastic Block Store (EBS). EBS is a block-level storage service that offers high-performance, low-latency storage for containerized applications.

These addons can be installed on an EKS cluster using the AWS Command Line Interface (CLI) or the AWS Management Console. Once installed, the addons can be managed through the EKS API or the AWS console.

Upgrading worker nodes

Upgrading worker nodes means replacing them with nodes running a newer version, with the aim of improving performance, scalability, and security. The process involves provisioning new nodes, migrating data, redistributing workloads, running health checks, and then removing the obsolete nodes. Regular maintenance is needed to keep the cluster healthy after the upgrade.

Pod Limits on node

The maximum number of pods a node can run depends on its instance type. The limit for each instance type is listed here:

https://github.com/awslabs/amazon-eks-ami/blob/master/files/eni-max-pods.txt
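The values in that file follow a simple formula when the default VPC CNI settings are used (no prefix delegation): each network interface (ENI) contributes its IPv4 addresses minus one, and two more pods are added for pods that use host networking. The function below is a small sketch of that arithmetic. The ENI and IP counts per instance type are taken from AWS's published limits and should be double-checked for your instance types.

```python
def max_pods(enis: int, ipv4_per_eni: int) -> int:
    """Pod limit with the default VPC CNI: ENIs * (IPs per ENI - 1) + 2."""
    return enis * (ipv4_per_eni - 1) + 2


# (number of ENIs, IPv4 addresses per ENI) for a few instance types
limits = {
    "t2.micro": (2, 2),
    "t3.medium": (3, 6),
    "m5.large": (3, 10),
}

for instance_type, (enis, ips) in limits.items():
    print(instance_type, max_pods(enis, ips))
```

For the t2.micro nodes used in the eksctl example this gives only 4 pods per node, and system pods such as kube-proxy and CoreDNS count against that limit.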

Security

- AWS IAM Authenticator

- ECR Scanning

To give a developer access to the cluster, create an IAM user and grant that user access to the cluster.

IAM Authenticator for EKS

IAM Authenticator simplifies authentication to EKS clusters by using AWS IAM, which reduces the need for traditional identity providers and the overhead of managing certificates. It works with IAM roles and Kubernetes RBAC for fine-grained access control.

Steps to Get Started:

- Add the user ARN to the system:masters group for admin access.

- Create a deployment role and role binding for developer access.
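The second step can be sketched as two RBAC objects, built here as Python dictionaries and printed as JSON. The namespace `dev`, the user name `developer`, the object names, and the chosen verbs are hypothetical. In practice the user name has to match the Kubernetes user that the IAM user is mapped to.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"

# Role: allows working with Deployments inside one namespace only.
role = {
    "apiVersion": f"{RBAC_API}/v1",
    "kind": "Role",
    "metadata": {"name": "deployment-role", "namespace": "dev"},
    "rules": [
        {
            "apiGroups": ["apps"],
            "resources": ["deployments"],
            "verbs": ["get", "list", "watch", "create", "update", "patch"],
        }
    ],
}

# RoleBinding: grants the Role above to the (hypothetical) user "developer".
role_binding = {
    "apiVersion": f"{RBAC_API}/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "deployment-role-binding", "namespace": "dev"},
    "subjects": [{"kind": "User", "name": "developer", "apiGroup": RBAC_API}],
    "roleRef": {"kind": "Role", "name": role["metadata"]["name"], "apiGroup": RBAC_API},
}

for obj in (role, role_binding):
    print(json.dumps(obj, indent=2))
```

Unlike membership in system:masters, this grants access to one resource type in one namespace, which is usually a better fit for developer access.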

Ingress: An Ingress is an object that manages external access to services within your cluster. It lets you define routing rules that direct requests to different services based on host name and URL path.
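The routing behaviour can be illustrated with a small lookup function. This is a sketch of host and path-prefix matching only, not of how any particular ingress controller is implemented. The host names, paths, and service names are made up for the example.

```python
from typing import Optional

# (host, path prefix, backend service): hypothetical routing rules
RULES = [
    ("shop.example.com", "/api", "api-service"),
    ("shop.example.com", "/", "frontend-service"),
    ("admin.example.com", "/", "admin-service"),
]


def path_matches(path: str, prefix: str) -> bool:
    """Prefix match on whole path segments: /api matches /api/x but not /apiv2."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(host: str, path: str) -> Optional[str]:
    """Return the service for a request, preferring the longest matching prefix."""
    matches = [
        (len(prefix), service)
        for rule_host, prefix, service in RULES
        if rule_host == host and path_matches(path, prefix)
    ]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0])[1]


print(route("shop.example.com", "/api/orders"))
print(route("shop.example.com", "/cart"))
```

With rules like these, one external entry point can serve several services, instead of exposing each service through its own load balancer.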

Autoscaling in K8s

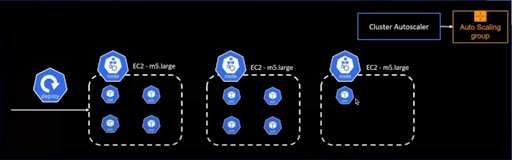

Cluster Autoscaler

- It checks whether the existing nodes have room for new pods.

- If they do not, a new node is created and the scheduler places the pending pods on it.

Challenges of using Cluster Autoscaler

Assume a scenario with nine pods, eight of which are already running on two nodes that are now full. The ninth pod needs to be scheduled, but neither of the existing nodes has room for it, so a new node is provisioned. That node ends up running a single pod and leaves three slots unused, which wastes resources. Karpenter can be used to address this issue.
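The nine-pod scenario can be reproduced with a short simulation. It assumes every node is the same size and offers four pod slots, which is implied by the three unused slots in the example. The function name and the slot model are simplifications for illustration.

```python
from typing import List


def place_pods(pod_count: int, slots_per_node: int) -> List[int]:
    """Place pods one by one, adding a fixed-size node whenever all nodes are full.

    Returns the number of used slots on each node.
    """
    nodes: List[int] = []
    for _ in range(pod_count):
        for i, used in enumerate(nodes):
            if used < slots_per_node:
                nodes[i] += 1            # pod fits on an existing node
                break
        else:
            nodes.append(1)              # no room anywhere: provision a new node
    return nodes


SLOTS = 4
usage = place_pods(9, SLOTS)
unused = sum(SLOTS - used for used in usage)
print(usage, unused)
```

The third node is the same size as the first two even though only one pod needs a home, which is where the three wasted slots come from.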

Karpenter

Karpenter selects a node that is appropriately sized for, and compatible with, the pods waiting to be scheduled.
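The idea of fitting the node to the pending pods can be sketched as picking the smallest instance type that still has enough room. This is a deliberately simplified illustration and not Karpenter's actual algorithm. The instance type names and their slot counts are hypothetical.

```python
from typing import Optional

# Hypothetical instance types and how many pod slots each one offers
INSTANCE_TYPES = {"small": 1, "medium": 2, "large": 4}


def choose_instance(pending_pods: int) -> Optional[str]:
    """Return the smallest instance type that fits all pending pods, if any."""
    fitting = {
        name: slots for name, slots in INSTANCE_TYPES.items() if slots >= pending_pods
    }
    if not fitting:
        return None                      # would need more than one node
    return min(fitting, key=fitting.get)


print(choose_instance(1))
print(choose_instance(3))
```

For the single leftover pod in the Cluster Autoscaler example, this approach would add a one-slot node instead of a four-slot node, leaving nothing unused.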

Monitoring and logging in Kubernetes

Monitoring and logging are essential for tracking cluster health and understanding application behavior.

System and application logs

Analysis of system logs from Kubernetes components and application logs provides insights into worker nodes and applications.